How to Audit Backlinks in Google Search Console: How to Fix Crawled External Links, Avoid Them, and Understand Their Impact?

Auditing Backlinks: What’s Wrong?

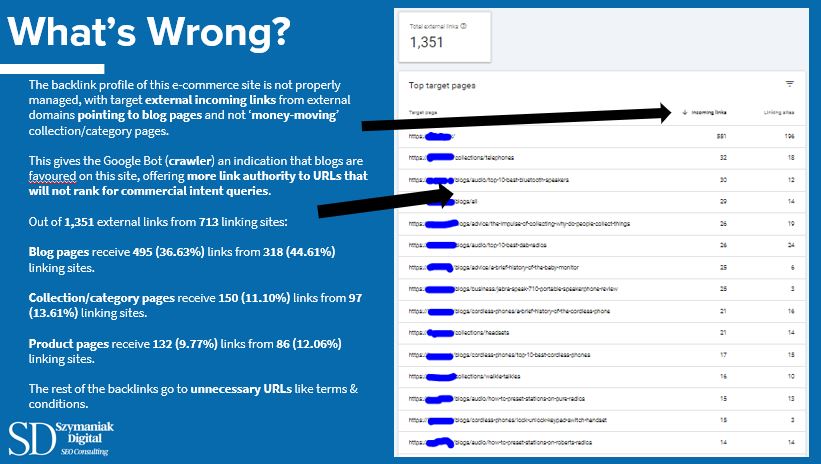

The backlink profile of this e-commerce site is poorly managed: incoming links from external domains point mainly to blog pages rather than to the ‘money-moving’ collection/category pages.

As a result, Google’s crawler interprets the site as favouring blog content, which diverts valuable link authority away from the URLs that are crucial for ranking for commercial-intent queries.

From a total of 1,351 external links across 713 linking sites:

Blog pages receive 495 links (36.63%) from 318 (44.61%) linking sites.

Collection/Category pages receive 150 links (11.10%) from 97 (13.61%) linking sites.

Product pages receive 132 links (9.77%) from 86 (12.06%) linking sites.

The remaining backlinks point to non-essential pages, such as Terms & Conditions.
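A breakdown like the one above can be reproduced from any list of linked URLs and their link counts. The snippet below is a minimal sketch, assuming Shopify-style path prefixes (`/blogs/`, `/collections/`, `/products/`); the prefixes, function names, and sample rows are illustrative and are not the audited site’s data.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical path prefixes; adjust to the site's real URL structure.
PAGE_TYPES = {
    "/blogs/": "blog",
    "/collections/": "collection",
    "/products/": "product",
}


def classify(url: str) -> str:
    """Return the page type for a URL based on its path prefix."""
    path = urlparse(url).path
    for prefix, page_type in PAGE_TYPES.items():
        if path.startswith(prefix):
            return page_type
    return "other"


def link_share_by_type(rows):
    """Sum incoming links per page type and return (links, share %) per type.

    rows: iterable of (target_url, incoming_links) pairs.
    """
    totals = defaultdict(int)
    for url, links in rows:
        totals[classify(url)] += links
    grand_total = sum(totals.values())
    return {
        page_type: (links, round(100 * links / grand_total, 2))
        for page_type, links in totals.items()
    }


# Made-up sample rows, not the audited site's data.
sample = [
    ("https://example.com/blogs/news/radio-guide", 60),
    ("https://example.com/collections/radios", 25),
    ("https://example.com/products/handheld-radio", 10),
    ("https://example.com/pages/terms", 5),
]
shares = link_share_by_type(sample)
for page_type, (links, pct) in shares.items():
    print(f"{page_type}: {links} links ({pct}%)")
```

Note that this only sums links. Linking sites cannot be added up the same way, because one domain may link to several pages; counting them per page type needs per-domain data.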

One explanation for this issue is that the site’s business decision-maker agreed to acquire backlinks from a ‘link-building agency’ without considering the technical aspects of Off-Page SEO and their effect on organic traffic.

After a deep analysis of the site hierarchy, keyword and visibility index drops (identified in Sistrix), and overall URL authority (backlink profile), Konrad Szymaniak (Enterprise SEO Consultant) knows there is still a lot of work to do to fix the crawling errors.

If you’re enjoying this article, why not explore the Bournemouth SEO consultant services offered by Konrad Szymaniak, especially if you’re based in Bournemouth?

How to Recover Traffic and Revenue from Automated Backlink Services (Link-Building Agencies) & How to Avoid Crawler Issues?

How To Fix Poor Backlink Acquisition: Auditing What Google Crawls

As shown in the image above, blog pages are outperforming collection pages, primarily due to the higher number of backlinks directed toward the blog pages instead of the collection pages.

To fix Off-Page SEO issues on e-commerce websites, it’s important to focus on the most impactful elements. Start by identifying the top-performing URLs by type, then track the clicks to these URLs before and after the suspected cause of the traffic drop (in this case, the automated link-building agency’s work started to harm the site’s traffic in February 2024).
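The before-and-after comparison can be sketched in a few lines of Python. This is a minimal illustration, assuming one row of clicks per day and page type, and assuming 1 February 2024 as the cutoff (the article only gives the month); the function name and sample numbers are made up.

```python
from collections import defaultdict
from datetime import date

CUTOFF = date(2024, 2, 1)  # assumed start date; only the month is known


def average_daily_clicks(rows, cutoff=CUTOFF):
    """Return {page_type: {"before": avg, "after": avg}} of daily clicks.

    rows: iterable of (day, page_type, clicks), one row per day and type.
    """
    sums = defaultdict(lambda: {"before": [0, 0], "after": [0, 0]})
    for day, page_type, clicks in rows:
        period = "before" if day < cutoff else "after"
        bucket = sums[page_type][period]
        bucket[0] += clicks  # total clicks in the period
        bucket[1] += 1       # number of days in the period
    return {
        page_type: {
            period: (total / days if days else 0.0)
            for period, (total, days) in periods.items()
        }
        for page_type, periods in sums.items()
    }


# Made-up sample rows for illustration only.
rows = [
    (date(2024, 1, 30), "collection", 120),
    (date(2024, 1, 31), "collection", 100),
    (date(2024, 2, 1), "collection", 80),
    (date(2024, 2, 2), "collection", 60),
    (date(2024, 1, 31), "blog", 200),
    (date(2024, 2, 1), "blog", 260),
]
averages = average_daily_clicks(rows)
for page_type, periods in averages.items():
    print(page_type, periods)
```

Averaging per day rather than summing keeps the comparison fair when the two periods have different lengths.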

Steps to Fix and Recover from Automated Link Building

1. Identify the blog URLs that receive the highest number of external links from the greatest number of linking sites (use Google Search Console, plus another tool such as Ahrefs or SEMrush). I recommend doing this in BigQuery if you are using GSC because you will have more data, which is essential if you are working on large enterprise-level sites.

2. Use these blog URLs for internal linking purposes and distribute the link equity to the most important business-related and topically relevant Collections and Product URLs.

3. Use target keywords as anchor text from these blog URLs to the Collections and Product URLs.

4. Only distribute the link equity through internal links where it makes sense topically. For example, if there is a Blog URL about Motorola Radios, make sure to only link internally to Collection/Product URLs that sell Motorola Radios, other Motorola products, and other radios. This maintains topical relevance and builds authority for the linked URLs so they can rank for target queries. I suggest using Screaming Frog to identify internal linking opportunities by using n-grams. This way, you can locate the ‘unlinked’ words that appear on your site. You can also limit the n-gram search to a specific section of the site by crawling only the subfolder you want to create internal links from.

5. If there are not enough products in the future, or the business would like to focus on other products that these blog URLs do not cover as much, consider using 301 (permanent) redirects to URLs the business would like to focus on and that are at least somewhat topically relevant. This way, we will not lose link authority (backlinks), and the redirect target will benefit from the improved ranking position.
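Step 1 above can be sketched in Python once the linked-pages data has been exported to CSV. This is a minimal sketch: the column names (`Target page`, `Incoming links`, `Linking sites`), the `/blogs/` prefix, and the sample rows are assumptions and may differ from your export.

```python
import csv
import io

# Made-up sample mimicking a "top linked pages" export; the column names
# are an assumption and may differ in your export.
SAMPLE_CSV = """Target page,Incoming links,Linking sites
https://example.com/blogs/news/motorola-radio-guide,120,45
https://example.com/blogs/news/radio-licence-faq,80,60
https://example.com/collections/motorola-radios,30,12
https://example.com/blogs/news/company-update,5,2
"""


def top_blog_donors(csv_text, blog_prefix="/blogs/", limit=10):
    """Return blog URLs sorted by linking sites, then by incoming links."""
    reader = csv.DictReader(io.StringIO(csv_text))
    blog_rows = [
        (row["Target page"], int(row["Incoming links"]), int(row["Linking sites"]))
        for row in reader
        if blog_prefix in row["Target page"]
    ]
    # Linking sites first: many links from one domain count for less
    # than links spread across many domains.
    blog_rows.sort(key=lambda r: (r[2], r[1]), reverse=True)
    return blog_rows[:limit]


donors = top_blog_donors(SAMPLE_CSV)
for url, links, sites in donors:
    print(f"{sites:>4} sites  {links:>4} links  {url}")
```

The URLs at the top of this list are the strongest candidates to link from in steps 2 to 4.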

Key Fixes:

- Track URL rankings and traffic for high-search volume keywords before and after fixing your backlink profile. This is where you will see changes in performance based on your input.

- Build topic clusters for money-driving keywords. Use Google Search Console and Google Analytics (GA4).

- Use internal linking as a way to spread link equity across your site (n-grams in Screaming Frog).
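The n-gram idea can also be illustrated outside Screaming Frog. The snippet below is a standalone sketch of the same principle, not Screaming Frog’s own feature: it looks for pages that mention a target phrase but do not yet link to the matching URL. The page data, phrases, and function names are hypothetical.

```python
import re


def ngrams(text, n):
    """Return the set of lowercase word n-grams found in text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def unlinked_opportunities(pages, targets):
    """Find pages that mention a target phrase but do not link to its URL.

    pages:   {page_url: {"text": body_text, "links": set_of_internal_urls}}
    targets: {phrase: target_url}
    """
    found = []
    for page_url, page in pages.items():
        for phrase, target_url in targets.items():
            n = len(phrase.split())
            mentioned = phrase.lower() in ngrams(page["text"], n)
            already_linked = target_url in page["links"]
            if mentioned and not already_linked and page_url != target_url:
                found.append((page_url, phrase, target_url))
    return found


# Made-up pages and targets for illustration only.
pages = {
    "/blogs/news/motorola-radio-guide": {
        "text": "Our guide to Motorola radios for events.",
        "links": set(),
    },
    "/blogs/news/licence-faq": {
        "text": "Do Motorola radios need a licence?",
        "links": {"/collections/motorola-radios"},
    },
    "/blogs/news/company-update": {"text": "We moved office.", "links": set()},
}
targets = {"motorola radios": "/collections/motorola-radios"}

opportunities = unlinked_opportunities(pages, targets)
for page_url, phrase, target_url in opportunities:
    print(f"{page_url}: link '{phrase}' to {target_url}")
```

Because the targets map a phrase to one specific URL, the topical-relevance rule from step 4 is enforced by how you build that mapping.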

How to Avoid Mismanaging Your Backlink Profile & Prevent Crawler Issues?

Before starting any traffic drop recovery, it’s crucial to take several steps to avoid major issues happening again. First, always create a full export of your data, and record when you exported it and exactly which steps you took to clean it for your objectives, documenting everything along the way.

You may also need a backup of your database so that you can restore everything if needed. Test the optimisation on a personal site first. Konrad Szymaniak has 10 enterprise-level websites built purely for testing, so everything can be trialled in a test environment rather than by relying on ‘best practices’, since some techniques are outdated and false information is shared publicly by Google itself.

After setting up your testing environment, ensure all files are transferred, and update the click data so that you can tell whether the hypothesis holds. In previous consulting work, Konrad tested on a website in a similar industry, with a similar number of URLs and similar traffic, and the internal-linking hypothesis proved correct, meaning the optimisation could start on the client site. This gave the client evidence that the optimisations could work and would drive an effective return.

Optimise your e-commerce site’s category pages to get more backlinks to those pages instead of your blog pages by following these tips:

- Create a well-organised URL structure: A hierarchy of category URLs helps search engines understand your website’s structure, which improves SEO and usability.

- Use a linking strategy: Link related content across your site (from the blog pages with the highest number of backlinks and referring domains) to help users, as well as crawlers, navigate the site.

- Make your site easy to navigate: Keep every page three or fewer clicks away from your homepage.

- Use breadcrumbs: Breadcrumbs help users navigate your site and can appear on SERP listings.

- Add category text copy: Add detailed copy to your category pages, written almost entirely for SEO purposes.

- Use relevant and descriptive anchor texts: Describe the linked page in words that make sense to users, not just to search engines.

- Avoid link schemes: Don’t buy or sell links or use automated link-building tools. Double-check the links that your site is receiving from a ‘link-building agency’.

- Get listed on “where to buy” pages: If you stock third-party products, check if their brands have stock list pages and pitch to be included (this includes pitching to publishers to review the products you sell on your site).
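The ‘three or fewer clicks’ rule from the list above can be checked with a breadth-first search over the internal link graph. This is a minimal sketch, assuming you already have a mapping of each page to the pages it links to (for example from a crawl export); the graph and function names below are made up.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search from the homepage; returns {url: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, home="/", max_clicks=3):
    """Return pages that are unreachable or more than max_clicks from home."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(p for p in all_pages if depths.get(p, max_clicks + 1) > max_clicks)


# Made-up internal link graph: page -> pages it links to.
site = {
    "/": ["/collections", "/blogs"],
    "/collections": ["/collections/radios"],
    "/collections/radios": ["/products/radio-a"],
    "/products/radio-a": ["/products/radio-a-battery"],
    "/blogs": [],
    "/pages/orphan": ["/"],
}
depths = click_depths(site)
problem_pages = too_deep(site)
print(problem_pages)
```

Pages that no other page links to are reported too, since a crawler starting from the homepage cannot reach them at all.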

Crawled Backlinks Audit: Impact of the Fix & What to Expect?

Automated Link Building Recovery: Impact of the Fix & What to Expect?

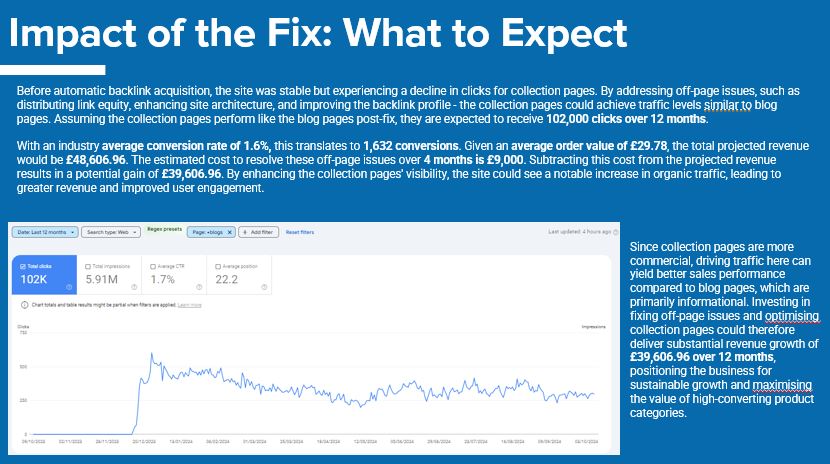

Before the automated backlink acquisition began, the site was stable but experiencing a decline in clicks for collection pages. By addressing off-page issues (distributing link equity, enhancing site architecture, and improving the backlink profile), the collection pages could achieve traffic levels similar to blog pages. Assuming the collection pages perform like the blog pages post-fix, they are expected to receive 102,000 clicks over 12 months.

To be clear, these figures are forecasts that assume Google’s algorithm continues to favour the site. Actual revenue gains may differ, as Google changes its algorithm frequently.

With an industry average conversion rate of 1.6%, this translates to 1,632 conversions. Given an average order value of £29.78, the total projected revenue would be £48,606.96. The estimated cost to resolve these off-page issues over 4 months is £9,000. Subtracting this cost from the projected revenue results in a potential gain of £39,606.96. By enhancing the collection pages’ visibility, the site could see a notable increase in organic traffic, leading to greater revenue and improved user engagement.
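The projection is simple arithmetic, so it is easy to rerun with your own inputs. The sketch below uses the rounded figures quoted above; the function and variable names are illustrative, and because the published inputs are rounded, the output may differ by a few pounds from the figures in this article.

```python
from decimal import Decimal


def project_revenue(clicks, conversion_rate, average_order_value, cost):
    """Return (conversions, revenue, net_gain) for a traffic forecast."""
    conversions = clicks * conversion_rate
    revenue = conversions * average_order_value
    net_gain = revenue - cost
    return conversions, revenue, net_gain


# Rounded inputs as quoted in the article.
conversions, revenue, net_gain = project_revenue(
    clicks=Decimal("102000"),
    conversion_rate=Decimal("0.016"),      # 1.6% industry average
    average_order_value=Decimal("29.78"),  # GBP, rounded
    cost=Decimal("9000"),                  # GBP, over 4 months
)
print(f"Conversions: {conversions:,.0f}")
print(f"Revenue:     £{revenue:,.2f}")
print(f"Net gain:    £{net_gain:,.2f}")
```

`Decimal` is used instead of floats so that the currency values are not affected by binary rounding.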

Since collection pages are more commercial, driving traffic here can yield better sales performance compared to blog pages, which are primarily informational. Investing in fixing off-page issues and optimising collection pages could therefore deliver substantial revenue growth of £39,606.96 over 12 months, positioning the business for sustainable growth and maximising the value of high-converting product categories.

Crawled Backlinks Audit – Automated Link Building Recovery: Conclusion

The e-commerce site currently has an imbalanced backlink profile: the largest share of external links (36.63%) points to blog pages rather than collection/category pages, undermining its potential for commercial-intent rankings.

By reallocating link equity from high-performing blog pages to collection pages, the site could significantly increase its organic traffic, with an estimated 102,000 clicks and £48,606.96 in projected revenue over the next 12 months.

The estimated cost of resolving off-page crawler issues is £9,000, resulting in a potential revenue gain of £39,606.96 after expenses. This presents a strong return on investment for enhancing collection pages’ visibility.

Optimising collection pages to receive more backlinks not only improves organic traffic but also maximises the commercial value of the site, positioning the business for long-term success and improved sales performance.

If you made it this far and are interested in chatting with Konrad Szymaniak (Independent Enterprise SEO Consultant) about all things Backlinks, Auditing, and Crawling, book a consultation with him.

FAQs

What is the best tool for a backlink audit?

My favourite tool is Google Search Console because it is Google’s own data and reflects what Googlebot crawls. I also recommend using Ahrefs or SEMrush to back up what GSC is showing.

How long does a backlink audit take?

A full enterprise-level backlink audit takes around 4 months due to the complexity involved: which external links point to your site, where they point, their anchor text, and whether these links harm or benefit your traffic metrics over time.

What are the benefits of a backlink audit?

A backlink audit gives you a full picture of what other websites are saying about your site. You do not want spammy websites talking about you, as you may get targeted by Google. The main benefit is seeing what needs to be done and setting priorities for each activity, whether that is spreading link equity via an internal linking strategy or removing URLs with spammy links on your site.